2024 C21U Impact Report

Innovation

Enhancing Graduate School Admissions with AI-Powered Tools

The Online Master of Science in Analytics (OMSA), offered through Georgia Tech Professional Education (GTPE), a sister unit under Lifetime Learning, is a degree program that combines computing, business, statistics, and research. Since 2021, C21U's Jonna Lee, director of research in education, and Meryem Yilmaz Soylu, research scientist, have partnered with the program to provide tools that enhance the applicant selection process. These AI-enhanced tools help identify the applicants most likely to complete the program successfully, based on their entering skills and experiences.

Applicant Success Prediction Tools

Our research team created a model to predict an applicant’s success in the OMSA program. They defined success in two dimensions: admission (whether an applicant was admitted to the program) and academic achievement (indicated by course and overall GPA scores). The team tested predictive models on a comprehensive set of application features, including processed text-based application materials (e.g., letters of recommendation, statement of purpose documents), and continuously trained and tested various machine learning (ML) models. This rigorous, iterative process helped ensure the reliability and accuracy of our predictive model.

Our work in progress was presented at the Learning @ Scale conference in 2021 and 2022 and at AERA (American Educational Research Association) in 2023. These presentations showcased our research and sparked engaging discussions on the future of predictive tools in academic admissions. The team shared their findings and the potential Institute-wide implementation of the tool with the vice provost for enrollment management, Dr. Paul Kohn, and his team.

21st Century and Leadership Skills Detection in LORs

Letters of recommendation (LORs) are essential components of application packages, providing valuable insights into applicants' qualifications, characteristics, abilities, and experiences beyond test scores. But in programs with large numbers of applicants, they can be very time-consuming to read. Last year, Georgia Tech received nearly 60,000 applications! Leveraging its AI expertise, the C21U research team developed two tools supported by ML and artificial intelligence (AI) to address this problem. These tools aim to revolutionize the application evaluation process, benefiting applicants and admissions committees.

21st-Century Skills Assessment

One tool was devised to identify 21st-century skills in LORs submitted for OMSA program applications. The skills include critical thinking and problem-solving, professionalism and work ethic, leadership and teamwork, communication, and character qualities (e.g., curious, reliable, emotionally mature, cheerful). To develop the model framework, the C21U research team scored each applicant based on how many of these words appeared in their LORs, with higher scores indicating stronger skills. Using an ML technique called the Bagging (bootstrap aggregating) classifier, they analyzed the data to understand how skills differed between applicants who were accepted and those who were not. Bagging trains many models on resampled versions of the data and combines their predictions, which makes the overall model more robust and accurate while producing results that can project an applicant’s success in the OMSA program. The team shared their work and initial findings at the 2022 UPCEA South Region conference.
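The scoring-and-classification idea described above can be pictured with a minimal sketch. It is not the team's implementation: the keyword lists, the toy scores, and the use of simple threshold rules ("stumps") as the base learner are all assumptions made for illustration. Only the general approach, counting skill words and aggregating models trained on bootstrap samples, comes from the description.

```python
import random
import re
from collections import Counter

# Hypothetical keyword lists; the actual vocabulary is not given in this report.
SKILL_TERMS = {
    "critical_thinking": {"analytical", "critical", "problem-solving"},
    "leadership_teamwork": {"leader", "leadership", "team", "collaborative"},
    "character": {"curious", "reliable", "cheerful"},
}

def skill_score(letter: str) -> int:
    """Count occurrences of any skill term in one letter (hyphenated words kept whole)."""
    words = Counter(re.findall(r"[a-z]+(?:-[a-z]+)*", letter.lower()))
    return sum(words[term] for terms in SKILL_TERMS.values() for term in terms)

def fit_stump(scores, labels):
    """Find the threshold t maximizing accuracy of the rule: score >= t -> accepted (1)."""
    best_t, best_acc = 0, -1.0
    for t in sorted(set(scores)):
        acc = sum((s >= t) == bool(y) for s, y in zip(scores, labels)) / len(scores)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t

def fit_bagging(scores, labels, n_models=25, seed=0):
    """Bootstrap aggregating: fit one stump per sample drawn with replacement."""
    rng = random.Random(seed)
    n = len(scores)
    thresholds = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        thresholds.append(fit_stump([scores[i] for i in idx],
                                    [labels[i] for i in idx]))
    return thresholds

def predict(thresholds, score):
    """Majority vote across all stumps; returns 1 (accepted) or 0 (not accepted)."""
    votes = sum(score >= t for t in thresholds)
    return int(votes * 2 > len(thresholds))

# Toy data (invented): skill scores and admission outcomes for eight applicants.
toy_scores = [0, 1, 1, 2, 4, 5, 6, 7]
toy_labels = [0, 0, 0, 0, 1, 1, 1, 1]
model = fit_bagging(toy_scores, toy_labels)

example = "She is a reliable, curious leader with strong problem-solving skills."
print(skill_score(example), predict(model, skill_score(example)))
```

Because each stump sees a slightly different resample of the data, no single unusual letter dominates the final vote, which is the robustness benefit that bagging is generally chosen for.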

Leadership Skills Detection

Meryem, Jonna, C21U’s Adrian Gallard, digital learning data analyst, and Rushil Desa, graduate research assistant, collaborated to develop an AI-powered tool to streamline the evaluation of leadership qualities from OMSA’s LORs. To achieve this goal, they employed large language models (LLMs) to detect clues for leadership skills by utilizing foundational data techniques, including building a repository of leadership terminology (such as teamwork, communication, and creativity), preprocessing data, and training transformer-based models. The team also developed a prototype showcasing an applicant's competencies, as highlighted in these letters.

Initially developed to streamline the document review process for admissions staff, this tool ultimately aims to offer constructive feedback for the professional development of admitted applicants by analyzing student-generated text data, including writing assignments and peer reviews.

In 2023, the team showcased the model and prototype at the Affordable Degrees at Scale and the AI for Lifetime Learning conferences, and in 2024 at the AI for Lifetime Learning Symposium.

Discussion Forum Data Projects

Discussion forums are an essential aspect of online learning. They are more than just a place to post comments—they create an interactive, collaborative, and engaging learning experience. The C21U research team conducted a series of studies to provide tools to instructors or curriculum developers in higher education to leverage the benefits of discussion forums.

Models to Predict the Phase of Cognitive Presence and Metacognitive Processes

In cognitive presence (CP), learners actively engage in critical thinking and problem-solving to construct meaning and understanding. It's how learners interact with the content, each other, and the instructor, which is essential in online learning and beyond. In the Community of Inquiry (CoI) framework, teaching, cognitive, and social presences must work together for online learning to be effective. A learner needs good teaching to guide them (teaching presence), a sense of community with their classmates (social presence), and active engagement with the material (cognitive presence) to learn and grow.

From 2021 to 2022, C21U researchers worked towards a strategy to measure and enhance, at scale, the quality of interactions in discussion forums. The team collected and processed discussion threads and time stamp structures from two online courses: Georgia Tech’s MOOC (Massive Open Online Course) in introductory computer programming and an artificial intelligence course from the Online Master of Science in Computer Science (OMSCS) program. They then used the Community of Inquiry framework to explore trends in cognitive presence.

In 2022, under Jonna’s supervision, the team created an ML model that detects the phase of cognitive presence (1-Triggering Event, 2-Exploration, 3-Integration, 4-Resolution) exhibited by a student’s post. Traditionally, cognitive presence in course discussions has been determined only after a course ends, either by a subjective survey or by a labor-intensive qualitative analysis of the course discussion logs. This research effort aims to provide instructors with an automated, near-real-time analysis of cognitive presence so that they can adapt their courses on the fly to enhance meaningful student engagement. The team also outlined future applications of such a model to help online students develop higher-order thinking. The study was published in the Online Learning Journal.
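To make the near-real-time idea concrete, the sketch below shows one way per-post phase labels could be rolled up into a summary for an instructor. It assumes the phase labels have already been produced by some model; the `phase_summary` function, the sample labels, and the choice to report Integration and Resolution together are illustrative assumptions, not details of the team's tool.

```python
from collections import Counter
from enum import IntEnum

class Phase(IntEnum):
    """The four cognitive presence phases named in the text."""
    TRIGGERING_EVENT = 1
    EXPLORATION = 2
    INTEGRATION = 3
    RESOLUTION = 4

def phase_summary(predicted_phases):
    """Return each phase's share of posts and the combined share of phases 3 and 4."""
    counts = Counter(Phase(p) for p in predicted_phases)
    total = sum(counts.values())
    shares = {
        phase.name: (counts[phase] / total if total else 0.0)
        for phase in Phase
    }
    # Assumption: treat Integration + Resolution as a rough signal of deeper engagement.
    later_share = shares["INTEGRATION"] + shares["RESOLUTION"]
    return shares, later_share

# Invented labels for eight posts in one discussion thread.
shares, later_share = phase_summary([1, 2, 2, 3, 2, 4, 2, 1])
print(shares)
print(f"Integration + Resolution share: {later_share:.0%}")
```

A summary like this could be recomputed whenever new posts arrive, so a thread that stays concentrated in the Exploration phase is visible while the course is still running.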

Metacognitive processes (MP) involve the 'thinking about thinking' aspect of learning. By planning, monitoring, and evaluating their learning, learners can identify areas for improvement and adjust their approach accordingly. It's akin to having a roadmap and a GPS to guide learners on their learning journey, helping them reach their destination more efficiently. Engaging in these metacognitive processes enables learners to become more self-aware and autonomous in their learning. They develop the ability to plan strategically, monitor their progress effectively, and critically evaluate their learning outcomes. This enhances their academic performance and fosters lifetime learning skills that can be applied in various contexts.

Expanding on our cognitive presence research, in 2023, C21U’s research team investigated how students employ metacognitive processes while engaging in an online discussion forum. Furthermore, we explored the connections between MP and CP and their potential impact on course performance.

This study has significant implications for designing online discussion activities, especially in MOOC environments, where additional support for self-regulated learning is crucial for success. Meryem presented this research at the 2023 UPCEA DT&L SOLA+R conference.

Course Activities and Learning Progress

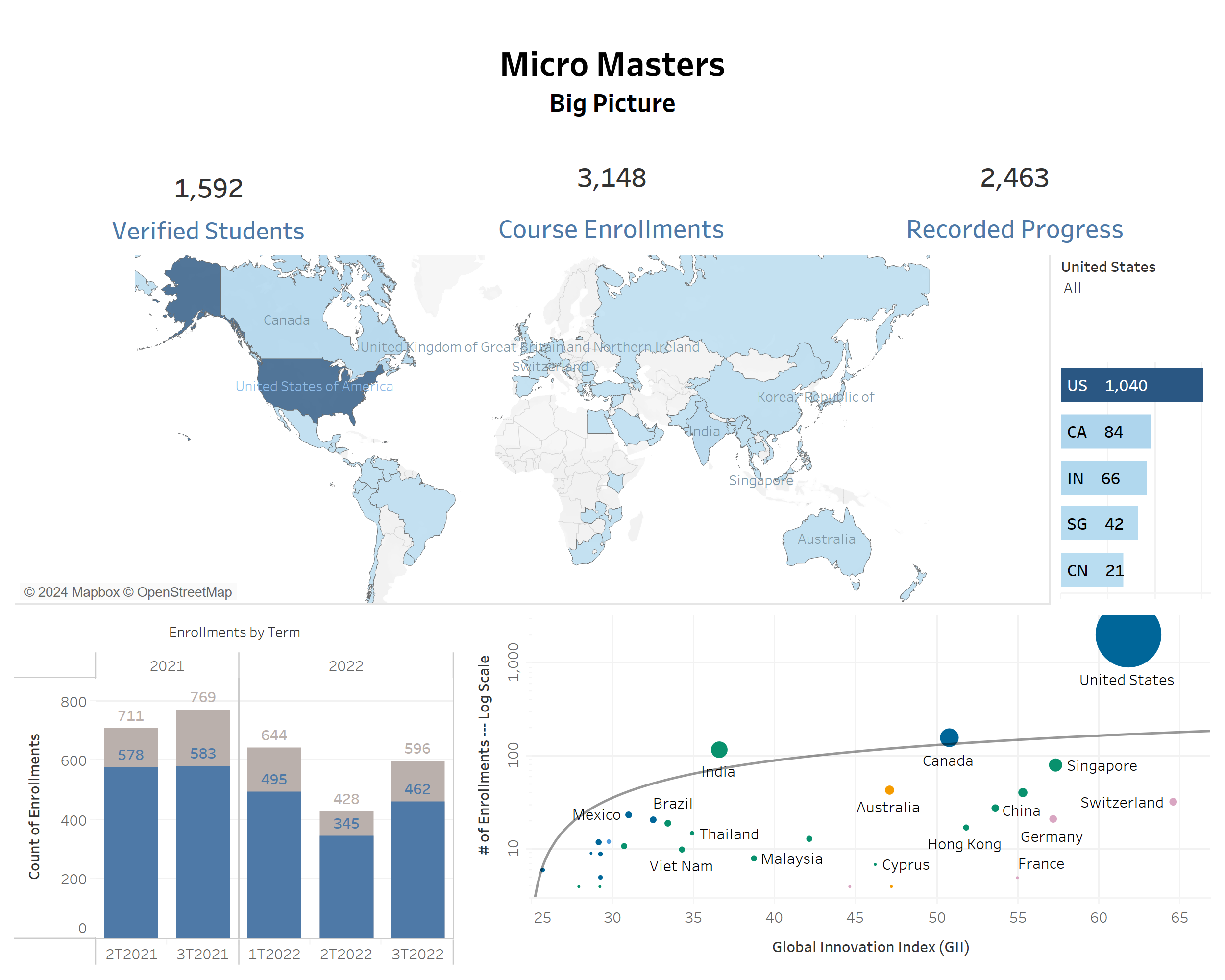

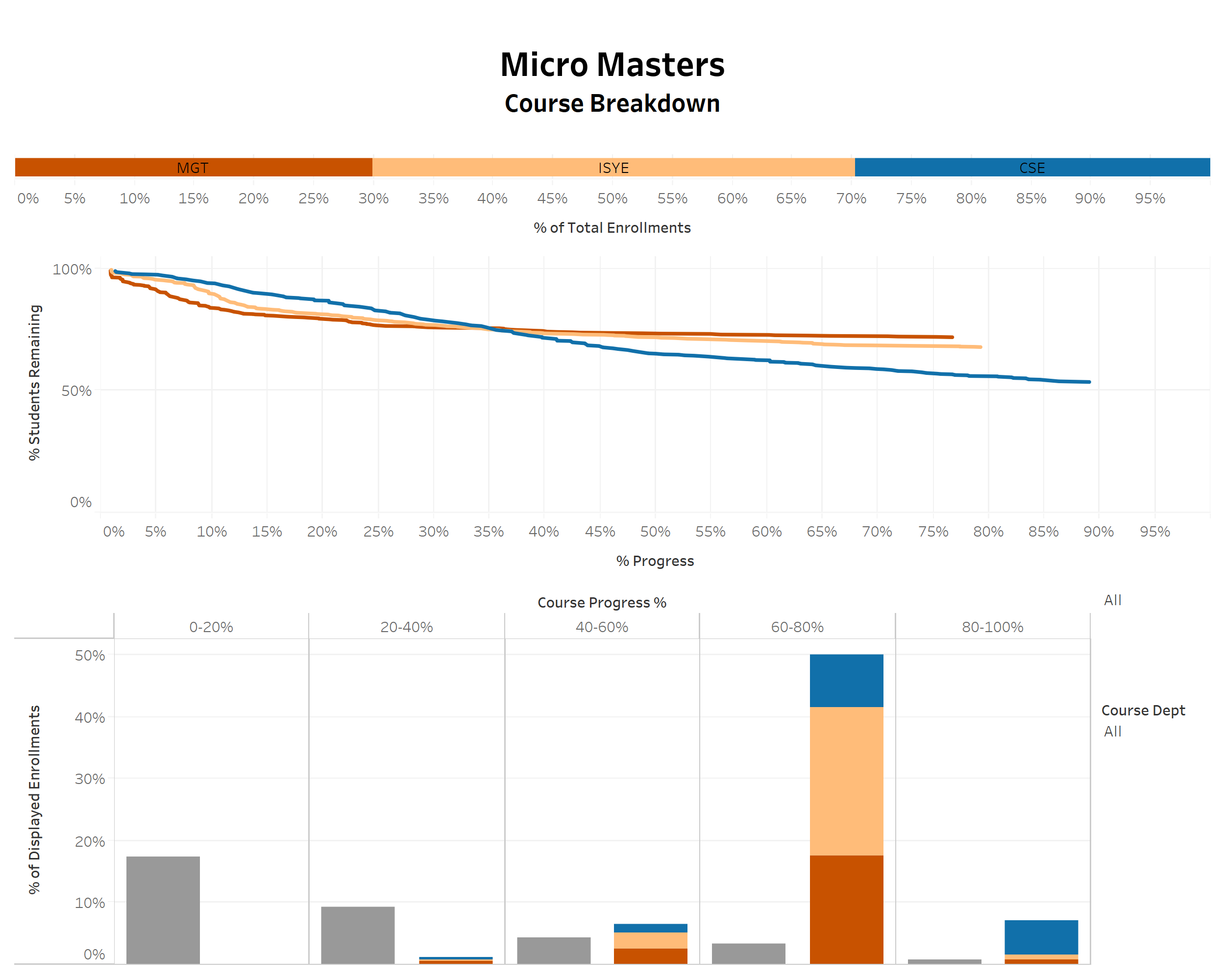

To understand the relationship between course activities and learning progress among students enrolled in the Georgia Tech Professional Education (GTPE) MicroMasters certificate program, which is offered on an affordable MOOC-based learning platform, the C21U research team applied machine learning (ML) techniques to clickstream data to examine the differences between the engagement patterns of learners in both programs. The study, conducted from 2022 to 2023, further supports optimizing the program’s design to enhance learners’ engagement and improve the overall completion rates. This work was published in the Journal of Research on Technology in Education.

Additionally, the team examined large data sets generated from the program to visually profile students' demographics and actions from enrollment to course completion, providing insights into students' persistence, progress, and success patterns. This study provides illustrative examples of data-driven practices to understand the needs of mid-career professionals and lifelong learners. It also identifies areas of opportunity to support student engagement and success in an online micro-credential program environment. The team shared their findings at the 2023 OLC Innovate and Affordable Degrees at Scale conferences.

ChatGPT Affordance Study

Launched in November 2022, ChatGPT is an advanced large language model (LLM) developed by OpenAI. This generative artificial intelligence (AI) chatbot was trained on massive amounts of text data from the internet using machine learning algorithms. Considerable research has focused on the uses and effects of ChatGPT in education, but little has addressed its impact on adult students' learning processes and outcomes. Understanding the role and influence of such fast-evolving technologies on these groups of learners is crucial. For this reason, since the summer of 2023, the C21U research team has been working on a study to explore how students utilize and perceive ChatGPT's capabilities in learning scenarios. Additionally, they seek to uncover the factors that influence, both positively and negatively, students' engagement and learning experiences with the chatbot.

To conduct this study, the researchers invited participants to interact with ChatGPT by completing two learning tasks: writing a persuasive essay and reviewing integral calculus concepts. They followed this activity with interview questions that helped the participants reflect on their learning experience with the chatbot. While data collection and analysis are still in progress, preliminary findings suggest that students perceive and use affordances of ChatGPT differently by task type. The researchers also observed that participants’ sense of agency over their learning process is vital in increasing engagement with the AI tool. When published, the study will provide valuable implications for educators, stressing the need to empower students to actively recognize and apply the affordances of generative AI tools in their self-directed learning.

VR/AR Readiness in Education

To encourage and help educational institutions harness the advantages of VR technology and enrich the learning experience for their students, in 2023 the C21U research team and Lina Kim, graduate research assistant, conducted a comprehensive survey to examine students’ VR readiness. The survey assessed students' familiarity with VR/AR technology, their confidence in using it, and their understanding of its unique features. It also explored students' perceptions and expectations concerning VR's potential benefits and applications in education.

To study a real-world scenario, our research team implemented a VR activity into different Tech multimodal literacy class sessions in collaboration with Zita Hüsing, a Marion L. Brittain Postdoctoral Fellow and assistant director of writing and communication at the Ivan Allen College of Liberal Arts. They also invited a select group of survey participants to engage in an immersive VR experience. During these sessions, participants completed tasks, allowing the team to observe their interaction with the technology. In-depth interviews followed to delve deeper into the students’ experiences. One of the highlights of these sessions was that participants consistently praised the interactive and immersive elements. For instance, one particularly enjoyed the tactile feedback while drawing in GTRecRoom, a public VR app developed by Aviv Alexander Loewenstein, a former Georgia Tech VIP student. The participant explained, “It provided vibrating feedback when touching the board to draw, which made it more intuitive.” However, some wanted the VR application or device to incorporate more interactive components to better simulate real-world senses. One described, “The controls weren't as interactive as I would have liked them to be.”

In 2024, our research team presented their findings at the AERA Annual Meeting, the USG Teaching and Learning Conference, and the iLRN: 10th International Conference of the Immersive Learning Research Network. Meryem explained the importance of sharing with peers what they learned in this study: “Our presentations highlighted the crucial significance of VR readiness in educational settings and offered practical guidance on integrating this technology into classroom learning. Additionally, we discussed methods for assessing student learning within VR environments, providing attendees with valuable insights into the benefits of immersive technology in education.”